
The risk of letting junior professionals teach AI to senior colleagues

Generative AI’s broad accessibility and applicability make it a vital tool for businesses — but those traits can also cause trouble when upskilling employees.

A new study on employee upskilling finds that relying on junior workers to educate senior colleagues about emerging technology is no longer a sufficient way to share knowledge, particularly when it comes to the use of generative artificial intelligence.

Generative AI’s characteristics, specifically its broad accessibility and applicability, are what give the technology its advantages, said MIT Sloan professor Kate Kellogg, a co-author of the paper “Don’t Expect Juniors to Teach Senior Professionals to Use Generative AI: Emerging Technology Risks and Novice AI Risk Mitigation Tactics.”

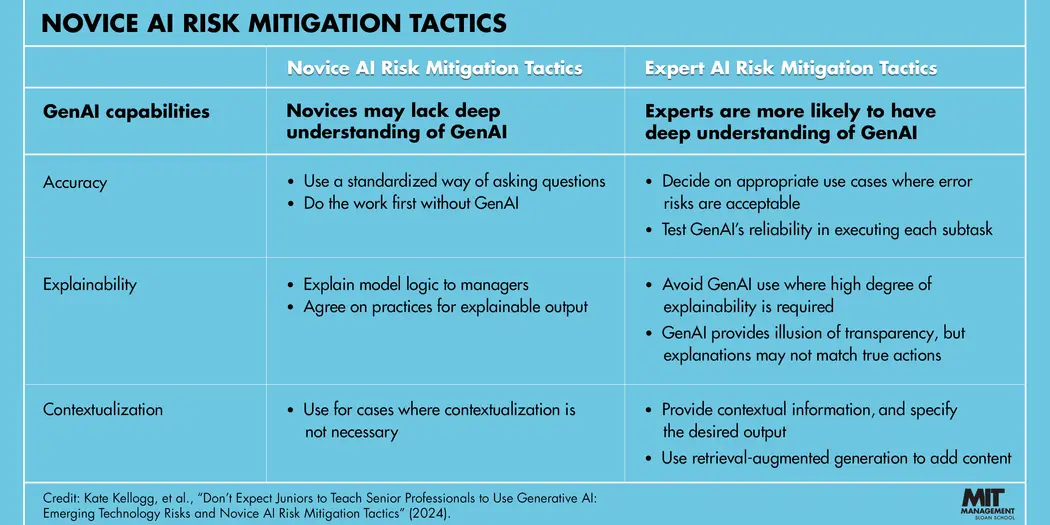

But those same features can also create traps for junior workers that prevent them from being a reliable source of expertise for more senior workers, despite their eagerness to help. The reason: Rather than offering the kind of advice that generative AI experts would share with users, junior professionals tend to recommend what Kellogg and her co-researchers call “novice AI risk mitigation tactics.”

These novice tactics “are grounded in a lack of deep understanding of the emerging technology’s capabilities, focus on change to human routines rather than system design, and focus on interventions at the project level rather than system deployer or ecosystem level,” the researchers write.

While it’s important for leaders to encourage enthusiasm in junior employees, “you are still the leader; you’re responsible, you’re accountable,” said Warwick Business School professor Hila Lifshitz-Assaf. It’s up to senior leaders to better understand how generative AI works and how to use it.

Lifshitz-Assaf and Kellogg are part of the multidisciplinary team of scholars behind the research and related paper. Their co-authors include Steven Randazzo of Warwick Business School; Ethan Mollick, SM ’04, PhD ’10, of the Wharton School; Fabrizio Dell’Acqua and Edward McFowland III of Harvard Business School; François Candelon of Boston Consulting Group; and Karim R. Lakhani of Harvard Business School.

The three traps

Tapping junior employees to teach senior employees about a new technology has worked well in the past, Kellogg said. Junior employees tend to be newer hires and are expected to experiment with tools and learn about their capabilities. Newer workers also haven’t developed ingrained habits around the older technologies that an organization uses.

But the speed at which generative AI is developing, along with its expanding availability, is disrupting that flow of knowledge between junior and senior workers, according to the research.

In July and August of 2023, the researchers interviewed 78 junior consultants after they’d participated in a field experiment in which they were given access to generative AI — in this case, GPT-4 — to solve a business problem (identifying channels and brands that would help a fictional retail apparel company improve revenue and profitability). The junior consultants had one to two years of experience, while senior employees were managers with five or more years of experience.

After participating in the experiment, the junior workers were asked these questions:

- Can you envision your use of generative AI creating any challenges in your collaboration with managers?

- What are some ways to deal with these challenges?

- How do you think these challenges could be mitigated?

Their answers revealed that junior professionals can fall into three traps that experts avoid when teaching and deploying AI. Those traps are:

- Lacking a deep understanding of generative AI’s accuracy, explainability, and contextualization (that is, whether its answers are relevant and understandable).

- Focusing on changes to human routines rather than to system design.

- Focusing on changes at the project level rather than at the deployer or ecosystem level.

For example, according to some of the anonymized answers recorded in the paper, the junior consultants tried to address generative AI’s output inaccuracy by recommending that workers use AI to augment human-created material rather than using AI to create it from scratch. Generative AI experts, on the other hand, recommend that leaders decide appropriate use cases for the technology where “error risks are acceptable.”

In another case, the interviewed juniors recommended changing human routines by training AI users to validate generated results. AI experts, however, would be more inclined to use models that provide source links alongside the generated results.

The researchers offered a list of mitigation tactics to address these traps. They recommended that corporate leaders ensure both junior and senior workers address AI risks by understanding AI’s capabilities and limitations, making changes to both system design and human routines, and “intervening in sociotechnical ecosystems, rather than only at the project level.”

Lifshitz-Assaf said that while it’s beneficial for companies to have junior workers who are excited about learning, “it’s more a matter of waking up and shaking the leaders that right now are over-trusting the juniors and their ability to know what’s right.”

“You need to be focused on system design and firm-level and ecosystem-level things,” Kellogg said of organization leaders. “Make sure that this isn’t just about offering trainings but focusing on system design, pushing back with vendors, and putting things in place around data.”